Component-Level LLM Evaluation

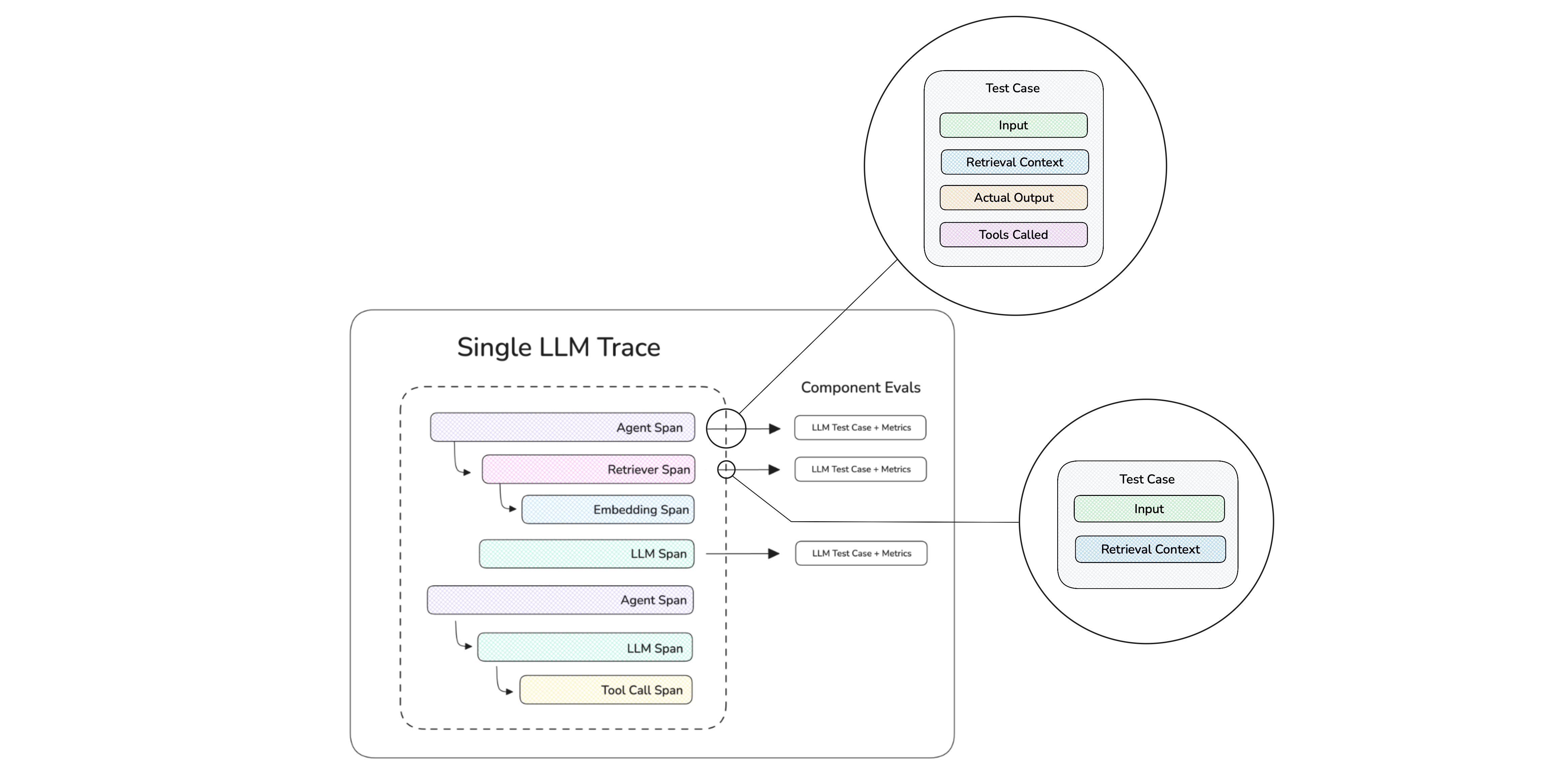

Component-level evaluation assesses individual units of LLM interaction between internal components, such as retrievers, tool calls, LLM generations, or even agents interacting with other agents, rather than treating the LLM app as a black box.

Component-level evaluation is currently only supported for single-turn evals.

When should you run Component-Level evaluations?

In end-to-end evaluation, your LLM application is treated as a black box, and evaluation is scoped to the overall system inputs and outputs in the form of an LLMTestCase.

If your application has nested components or a structure that a simple LLMTestCase can't easily handle, component-level evaluation allows you to apply different metrics to different components in your LLM application.

Common use cases that are suitable for component-level evaluation include (non-exhaustive):

- Chatbots/conversational agents

- Autonomous agents

- Text-to-SQL

- Code generation

- etc.

The pattern to notice is that use cases with more complex architectures are better suited to component-level evaluation.

How Does It Work?

Once your LLM application is decorated with @observe, you can provide it as an observed_callback and invoke it with Goldens, which creates test cases within your @observe-decorated spans. These test cases are then evaluated with their respective metrics to produce a test run.


Evals on traces are end-to-end evaluations, where a single LLM interaction is evaluated as a whole.

Spans make up a trace, and evals on spans represent component-level evaluations, where individual components in your LLM app are evaluated.
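To make the trace/span distinction concrete, the sketch below models a trace as a tree of spans in plain Python. ToySpan, ToyTrace, and the helper functions are hypothetical illustrations, not deepeval classes, and metric names are plain strings standing in for metric objects. End-to-end evals attach metrics to the trace as a whole, while component-level evals attach them to individual spans.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple

# Hypothetical toy model, not deepeval's classes: a span is one component
# call, and a trace is the tree of spans from a single app invocation.
@dataclass
class ToySpan:
    name: str
    metrics: List[str] = field(default_factory=list)  # metrics attached to this span
    children: List["ToySpan"] = field(default_factory=list)

@dataclass
class ToyTrace:
    root: ToySpan
    metrics: List[str] = field(default_factory=list)  # end-to-end metrics for the whole trace

def iter_spans(span: ToySpan) -> Iterator[ToySpan]:
    """Depth-first walk over a span and all of its descendants."""
    yield span
    for child in span.children:
        yield from iter_spans(child)

def end_to_end_targets(trace: ToyTrace) -> List[Tuple[str, str]]:
    """Evals on traces: every trace-level metric scores the app as a whole."""
    return [(trace.root.name, m) for m in trace.metrics]

def component_targets(trace: ToyTrace) -> List[Tuple[str, str]]:
    """Evals on spans: each metric scores only the span it is attached to."""
    return [(s.name, m) for s in iter_spans(trace.root) for m in s.metrics]

trace = ToyTrace(
    root=ToySpan("your_llm_app", children=[
        ToySpan("retriever"),
        ToySpan("generator", metrics=["AnswerRelevancy"]),
    ]),
    metrics=["AnswerRelevancy"],
)
print(end_to_end_targets(trace))  # [('your_llm_app', 'AnswerRelevancy')]
print(component_targets(trace))   # [('generator', 'AnswerRelevancy')]
```

The retriever span carries no metrics, so it appears in the trace but is not scored on its own.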

Component-level evaluations generate LLM traces, which are only visible on Confident AI. To view them, log in here or run:

deepeval login

Set Up Test Environment

Set Up LLM Tracing and Metrics

For component-level testing, you need to set up LLM tracing in your application. You can learn how to set up LLM tracing here.

from typing import List
from openai import OpenAI

from deepeval.tracing import observe, update_current_span
from deepeval.test_case import LLMTestCase
from deepeval.metrics import AnswerRelevancyMetric

# Decorating the outer app and each component lets deepeval map how spans nest
@observe()
def your_llm_app(input: str):
    @observe()
    def retriever(input: str):
        return ["Hardcoded", "text", "chunks", "from", "vectordb"]

    # Attaching metrics marks this span for component-level evaluation
    @observe(metrics=[AnswerRelevancyMetric()])
    def generator(input: str, retrieved_chunks: List[str]):
        res = OpenAI().chat.completions.create(
            model="gpt-4o",
            messages=[{"role": "user", "content": "\n\n".join(retrieved_chunks) + "\n\nQuestion: " + input}]
        ).choices[0].message.content

        # Create test case at runtime
        update_current_span(test_case=LLMTestCase(input=input, actual_output=res))

        return res

    return generator(input, retriever(input))

print(your_llm_app("How are you?"))

In the example above, we:

- Decorated different functions in our application with @observe, which allows deepeval to map out how components relate to one another.
- Supplied the AnswerRelevancyMetric to metrics in the generator, which tells deepeval that this component should be evaluated.
- Constructed test cases at runtime using update_current_span.

You can learn more about LLM tracing in this section.

What is LLM tracing?

The process of adding the @observe decorator to your app is known as tracing, which you can learn about in the tracing section.

An @observe decorator creates a span, and the overall collection of spans is called a trace.

As the example above shows, tracing with deepeval's @observe means you don't have to return variables such as the retrieval_context in awkward places just to create end-to-end LLMTestCases, as was necessary in end-to-end evaluation.

Create a dataset

Datasets in deepeval allow you to store Goldens, which are like precursors to test cases. They allow you to create test cases dynamically at evaluation time by calling your LLM application. Here's how you can create goldens:

Single-turn goldens:

from deepeval.dataset import Golden

goldens = [
    Golden(input="What is your name?"),
    Golden(input="Choose a number between 1 to 100"),
]

Multi-turn goldens:

from deepeval.dataset import ConversationalGolden

goldens = [
    ConversationalGolden(
        scenario="Andy Byron wants to purchase a VIP ticket to a Coldplay concert.",
        expected_outcome="Successful purchase of a ticket.",
        user_description="Andy Byron is the CEO of Astronomer.",
    )
]

You can also generate synthetic goldens automatically using the Synthesizer. Learn more here. You can now use these goldens to create an evaluation dataset that can be stored and loaded at any time.

Push to Confident AI:

from deepeval.dataset import EvaluationDataset

dataset = EvaluationDataset(goldens)
dataset.push(alias="My Evals Dataset")

Save locally as CSV:

from deepeval.dataset import EvaluationDataset

dataset = EvaluationDataset(goldens)
dataset.save_as(
    file_type="csv",
    directory="./example"
)

Save locally as JSON:

from deepeval.dataset import EvaluationDataset

dataset = EvaluationDataset(goldens)
dataset.save_as(
    file_type="json",
    directory="./example"
)

✅ Done. You can now use this dataset anywhere to run your evaluations automatically by looping over its goldens and generating test cases.

Run Component-Level Evals

You can use the dataset you just created and invoke your @observe-decorated LLM application within the evals_iterator() loop to run component-level evals.

Load your dataset

deepeval supports loading datasets stored in JSON files, CSV files, and Hugging Face datasets into an EvaluationDataset as either test cases or goldens.

From Confident AI:

from deepeval.dataset import EvaluationDataset

dataset = EvaluationDataset()
dataset.pull(alias="My Evals Dataset")

From CSV:

from deepeval.dataset import EvaluationDataset

dataset = EvaluationDataset()
dataset.add_goldens_from_csv_file(
    # file_path is the path to your .csv file
    file_path="example.csv",
    input_col_name="query"
)

From JSON:

from deepeval.dataset import EvaluationDataset

dataset = EvaluationDataset()
dataset.add_goldens_from_json_file(
    # file_path is the path to your .json file
    file_path="example.json",
    input_key_name="query"
)
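The CSV and JSON loaders above read each golden's input from a column or key named query. Assuming that layout, files like example.csv and example.json could be produced with the standard library as follows. The JSON file is assumed to be an array of objects, and the rows are sample data taken from the goldens earlier on this page.

```python
import csv
import json

# Sample rows; the only assumption carried over from the loaders is that
# the input column/key is named "query".
rows = [
    {"query": "What is your name?"},
    {"query": "Choose a number between 1 to 100"},
]

# example.csv: a header row with a "query" column, one golden input per row
with open("example.csv", "w", newline="", encoding="utf-8") as f:
    writer = csv.DictWriter(f, fieldnames=["query"])
    writer.writeheader()
    writer.writerows(rows)

# example.json: assumed to be a JSON array of objects, each with a "query" key
with open("example.json", "w", encoding="utf-8") as f:
    json.dump(rows, f, indent=2)
```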

Run evals using evals iterator

You can use the dataset's evals_iterator to run component-level evals by simply calling your LLM app within the loop for all goldens.

from somewhere import your_llm_app # Replace with your LLM app
from deepeval.dataset import EvaluationDataset

dataset = EvaluationDataset()
dataset.pull(alias="My Evals Dataset")

for golden in dataset.evals_iterator():
    # Invoke your LLM app
    your_llm_app(golden.input)
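Conceptually, evals_iterator() hands you one golden at a time and evaluates whatever test cases your instrumented components recorded during that iteration. The generator-based sketch below imitates that control flow with hypothetical names (toy_evals_iterator, toy_app, non_empty_metric) and a plain list standing in for update_current_span. It illustrates the pattern only and is not deepeval's implementation.

```python
from typing import Callable, Dict, Iterator, List

ToyTestCase = Dict[str, str]

# Hypothetical stand-ins, not deepeval's implementation.
collected: List[ToyTestCase] = []  # test cases recorded by instrumented components
scores: List[float] = []           # metric results gathered by the iterator

def toy_evals_iterator(goldens: List[str], metric: Callable[[ToyTestCase], float]) -> Iterator[str]:
    """Yield each golden, then score the test cases recorded while the caller handled it."""
    for golden in goldens:
        start = len(collected)
        yield golden  # the loop body invokes the app here
        scores.extend(metric(case) for case in collected[start:])

def toy_app(query: str) -> str:
    answer = query.upper()
    # Plays the role of update_current_span(test_case=...) inside a component
    collected.append({"input": query, "actual_output": answer})
    return answer

def non_empty_metric(case: ToyTestCase) -> float:
    """Hypothetical metric: 1.0 if the app produced any output."""
    return 1.0 if case["actual_output"] else 0.0

for golden in toy_evals_iterator(["What is your name?", ""], non_empty_metric):
    toy_app(golden)

print(scores)  # [1.0, 0.0]
```

Because scoring for a golden runs when the loop asks for the next item, the last golden's test cases are scored as the loop finishes.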

There are SIX optional parameters when using the evals_iterator():

- [Optional] metrics: a list of BaseMetric that allows you to run end-to-end evals for your traces.
- [Optional] identifier: a string that allows you to better identify your test run on Confident AI.
- [Optional] async_config: an instance of type AsyncConfig that allows you to customize the degree of concurrency during evaluation. Defaults to the default AsyncConfig values.
- [Optional] display_config: an instance of type DisplayConfig that allows you to customize what is displayed to the console during evaluation. Defaults to the default DisplayConfig values.
- [Optional] error_config: an instance of type ErrorConfig that allows you to customize how errors are handled during evaluation. Defaults to the default ErrorConfig values.
- [Optional] cache_config: an instance of type CacheConfig that allows you to customize the caching behavior during evaluation. Defaults to the default CacheConfig values.
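To illustrate what limiting the degree of concurrency means, here is a generic asyncio sketch that caps how many evaluations run at once with a semaphore. The max_concurrent parameter and the evaluate_one/evaluate_all helpers are illustrative assumptions, not AsyncConfig's actual fields or internals, and asyncio.sleep stands in for a metric's LLM call.

```python
import asyncio
from typing import List, Tuple

# Hypothetical illustration of bounded concurrency; not deepeval's AsyncConfig code.
async def evaluate_one(i: int, sem: asyncio.Semaphore, active: List[int], peak: List[int]) -> int:
    async with sem:                 # at most max_concurrent tasks get past this point
        active[0] += 1
        peak[0] = max(peak[0], active[0])
        await asyncio.sleep(0.01)   # stand-in for an LLM-judge call
        active[0] -= 1
        return i * i                # stand-in for a metric score

async def evaluate_all(n: int, max_concurrent: int) -> Tuple[List[int], int]:
    sem = asyncio.Semaphore(max_concurrent)
    active, peak = [0], [0]
    results = await asyncio.gather(*(evaluate_one(i, sem, active, peak) for i in range(n)))
    return list(results), peak[0]

results, peak = asyncio.run(evaluate_all(n=10, max_concurrent=3))
print(results, peak)
```

A semaphore keeps throughput reasonably high while bounding simultaneous requests, which is a common way to stay under a model provider's rate limits.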

We highly recommend setting up Confident AI with your deepeval evaluations so you can inspect your span and trace evals in an intuitive UI.

If you want to run component-level evaluations in CI/CD pipelines, click here.