The LLM Evaluation Framework

Used by some of the world's leading AI companies, DeepEval enables you to build reliable evaluation pipelines to test any AI system.

Native integration with Pytest that fits right into your CI workflow.
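As a rough illustration of what Pytest-native LLM testing looks like, here is a minimal sketch in plain Python. It does not use DeepEval's API: `generate_answer` is a hypothetical stand-in for the system under test, and `keyword_coverage` is a hypothetical deterministic metric. Pytest collects any function named `test_*`, so a failed threshold check fails the CI job like any other unit test.

```python
# Minimal sketch of a Pytest-style LLM test.
# All names here are hypothetical and are NOT DeepEval's API.


def generate_answer(question: str) -> str:
    """Hypothetical stand-in for the LLM application under test."""
    canned = {
        "What is the capital of France?": "The capital of France is Paris.",
    }
    return canned.get(question, "I don't know.")


def keyword_coverage(output: str, keywords: list[str]) -> float:
    """Deterministic metric: fraction of expected keywords found in the output (case-insensitive)."""
    if not keywords:
        return 1.0
    text = output.lower()
    hits = sum(1 for kw in keywords if kw.lower() in text)
    return hits / len(keywords)


def test_capital_question() -> None:
    # Pytest discovers this function automatically; a failing assert fails the run.
    output = generate_answer("What is the capital of France?")
    score = keyword_coverage(output, ["Paris", "France"])
    assert score >= 0.5, f"keyword coverage {score:.2f} below threshold 0.5"
```

With DeepEval itself, the equivalent test would use the library's own test-case and metric classes, and its documentation runs such files with `deepeval test run <file>`; check the DeepEval docs for the exact API.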

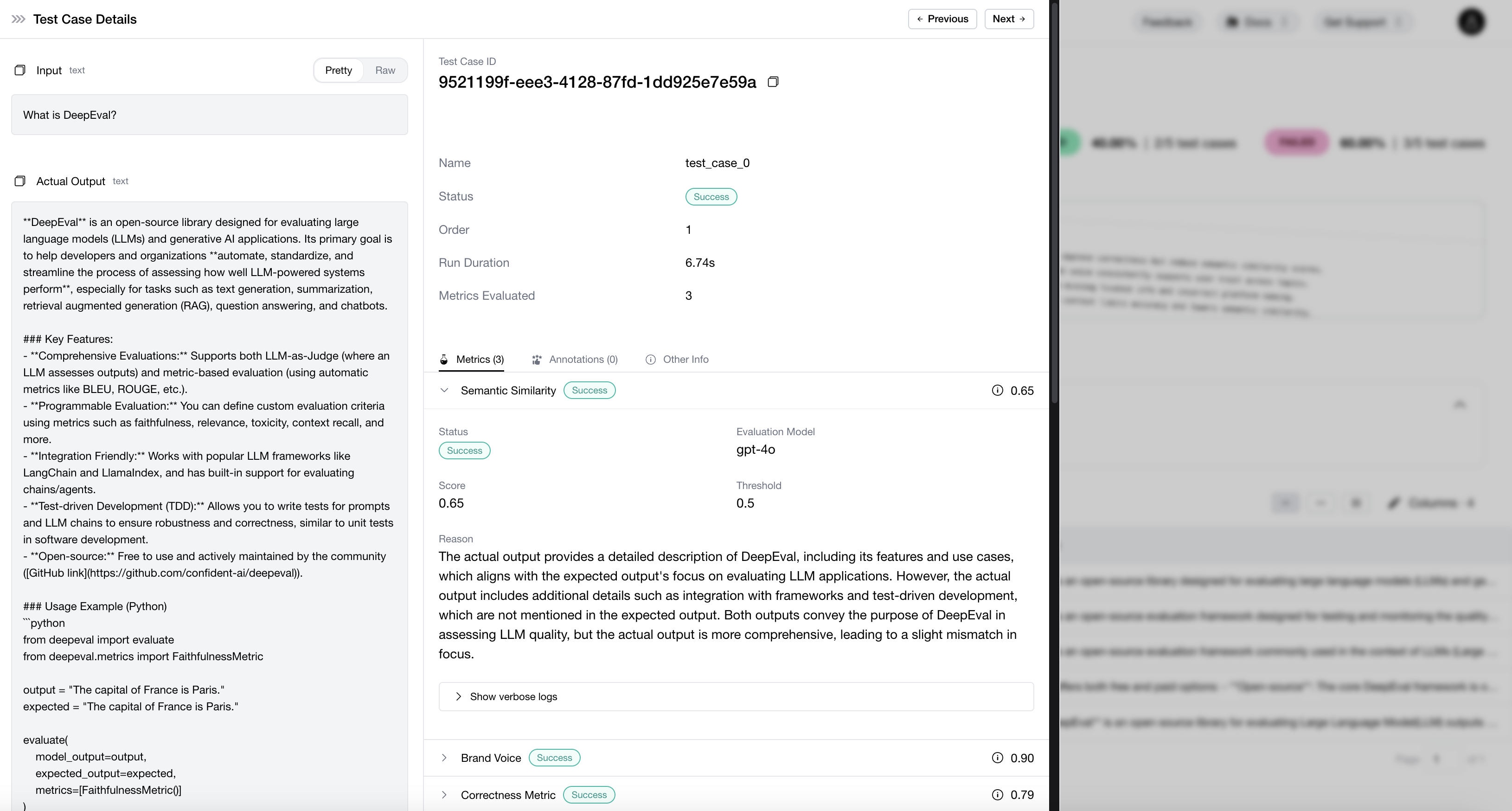

50+ research-backed metrics, including custom G-Eval and deterministic metrics.

Covers any use case and any system architecture, including multi-turn conversations.

Evaluate text, images, and audio with built-in multi-modal test cases.

No test data? No problem. Generate synthetic data and simulate conversations.

No need to manually tweak prompts. DeepEval automatically optimizes prompts for you.

Criteria-based, chain-of-thought reasoning for nuanced, subjective scoring via a form-filling paradigm.
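The criteria-based, form-filling approach is the idea behind G-Eval. In the original G-Eval formulation, the judge LLM fills in a rating on a discrete scale, and the final score is the probability-weighted sum of the candidate ratings, $\sum_i p(s_i)\, s_i$, rather than the single sampled rating. The sketch below assumes you already have the judge's probabilities for ratings 1 to 5 (the numbers are made up for illustration) and rescales the result to the range 0 to 1. It is not DeepEval's implementation, and `weighted_geval_score` is a hypothetical name.

```python
# Sketch of G-Eval-style probability-weighted scoring.
# Inputs are assumed; this is not DeepEval's code.


def weighted_geval_score(
    rating_probs: dict[int, float], min_rating: int = 1, max_rating: int = 5
) -> float:
    """Return the expected rating under the judge's distribution, rescaled to [0, 1].

    rating_probs maps each candidate rating (e.g. 1..5) to the probability the
    judge LLM assigned to emitting that rating. Probabilities are renormalized
    so they sum to 1 before taking the expectation.
    """
    total = sum(rating_probs.values())
    if total <= 0:
        raise ValueError("rating probabilities must sum to a positive value")
    expected = sum(rating * p for rating, p in rating_probs.items()) / total
    # Map the expected rating from [min_rating, max_rating] onto [0, 1].
    return (expected - min_rating) / (max_rating - min_rating)


# Hypothetical judge distribution over ratings 1-5 for a single test case.
probs = {1: 0.0, 2: 0.05, 3: 0.15, 4: 0.5, 5: 0.3}
score = weighted_geval_score(probs)  # expected rating 4.05, rescaled to about 0.7625
```

Weighting by probability gives a finer-grained score than the raw integer rating, which helps separate outputs that would otherwise tie.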

A tree-based, directed acyclic graph (DAG) approach for objective, multi-step conditional scoring.
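One way to picture DAG-style scoring: each node asks a narrow yes/no question, the answer selects the next edge, and the path ends at a leaf that carries a fixed score. In the sketch below the judgments are plain Python predicates standing in for LLM calls; the `Node` and `Leaf` classes, the `evaluate` function, and the example rubric are all hypothetical and are not DeepEval's DAG classes.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    score: float  # terminal score assigned when evaluation reaches this node


@dataclass
class Node:
    question: str                 # human-readable description of the check
    judge: Callable[[str], bool]  # stand-in for an LLM yes/no judgment
    if_yes: "Tree"
    if_no: "Tree"


Tree = Union[Node, Leaf]


def evaluate(tree: Tree, output: str) -> float:
    """Walk the graph from the root, following yes/no edges until a leaf is reached."""
    current = tree
    while isinstance(current, Node):
        current = current.if_yes if current.judge(output) else current.if_no
    return current.score


# Hypothetical rubric for a summary: it must mention the key term, then ideally stay short.
rubric = Node(
    question="Does the summary mention 'refund'?",
    judge=lambda text: "refund" in text.lower(),
    if_yes=Node(
        question="Is the summary under 20 words?",
        judge=lambda text: len(text.split()) < 20,
        if_yes=Leaf(1.0),
        if_no=Leaf(0.5),
    ),
    if_no=Leaf(0.0),
)

print(evaluate(rubric, "Customer asked for a refund on a late order."))  # 1.0
```

Because each node answers one objective question, the final score is fully determined by the path taken, which makes results easier to audit than a single holistic rating.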

Question-Answer Generation (QAG) for equation-based scoring using close-ended questions.
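QAG scoring reduces to a simple equation: generate close-ended (yes/no) questions, collect a verdict for each, and compute the score as (number of "yes" verdicts) / (number of questions) instead of asking a model for a number directly. A minimal sketch, assuming the verdicts have already been collected (here they are hard-coded); the function name `qag_score`, treating any answer other than "yes" as a failure, and returning 1.0 for an empty list are assumptions made for illustration.

```python
def qag_score(verdicts: list[str]) -> float:
    """Fraction of close-ended questions answered 'yes'.

    Each verdict is the judge's answer to one yes/no question, such as
    "Is claim i supported by the retrieved context?". Any answer other than
    'yes' (for example 'no' or 'idk') counts against the score.
    """
    if not verdicts:
        return 1.0  # assumption: nothing to check counts as a pass
    yes_count = sum(1 for v in verdicts if v.strip().lower() == "yes")
    return yes_count / len(verdicts)


# Hypothetical verdicts for four claims extracted from an answer.
verdicts = ["yes", "yes", "no", "yes"]
print(qag_score(verdicts))  # 0.75
```

Keeping each question close-ended constrains what the judge has to decide, so the arithmetic, not the model, produces the final number.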

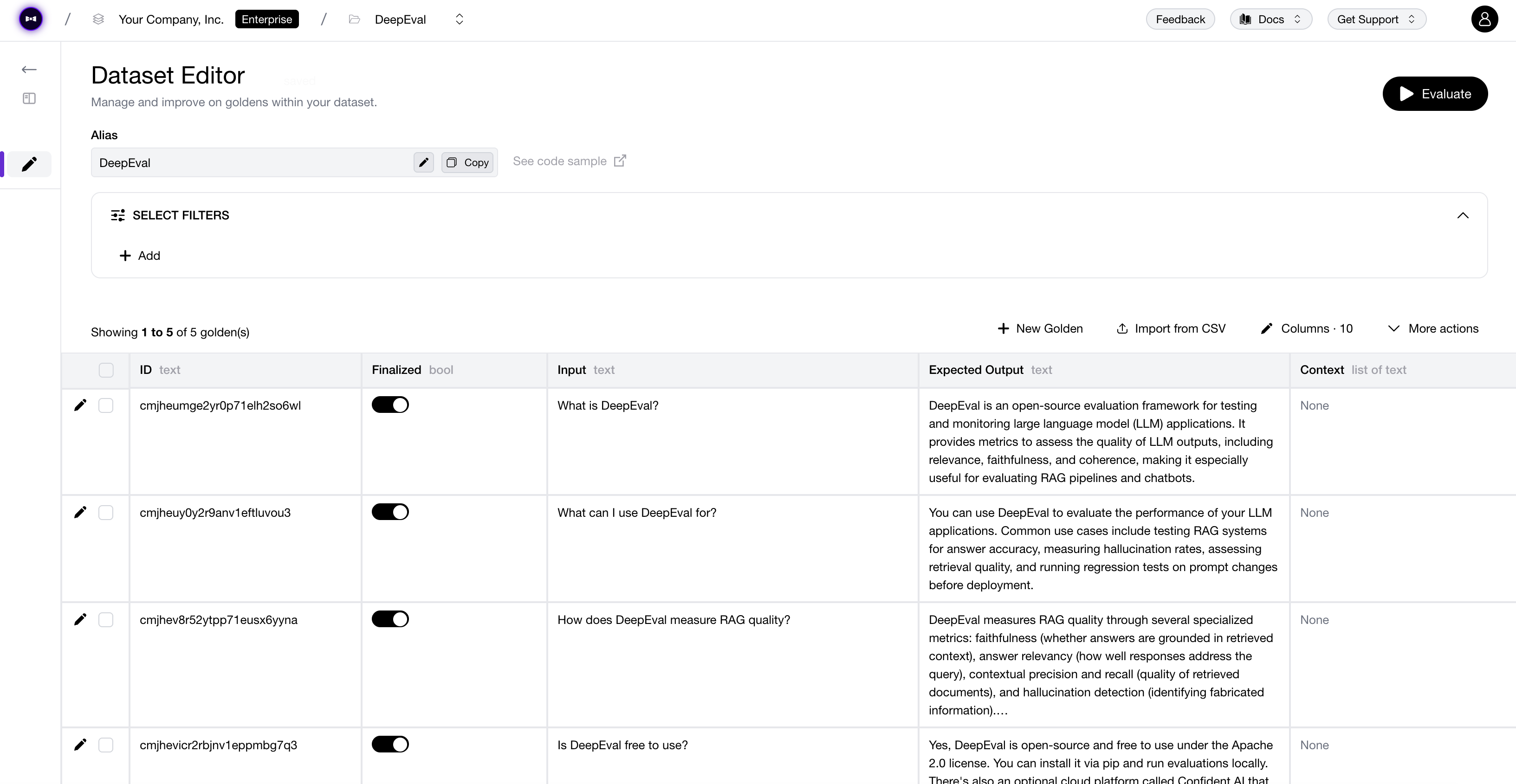

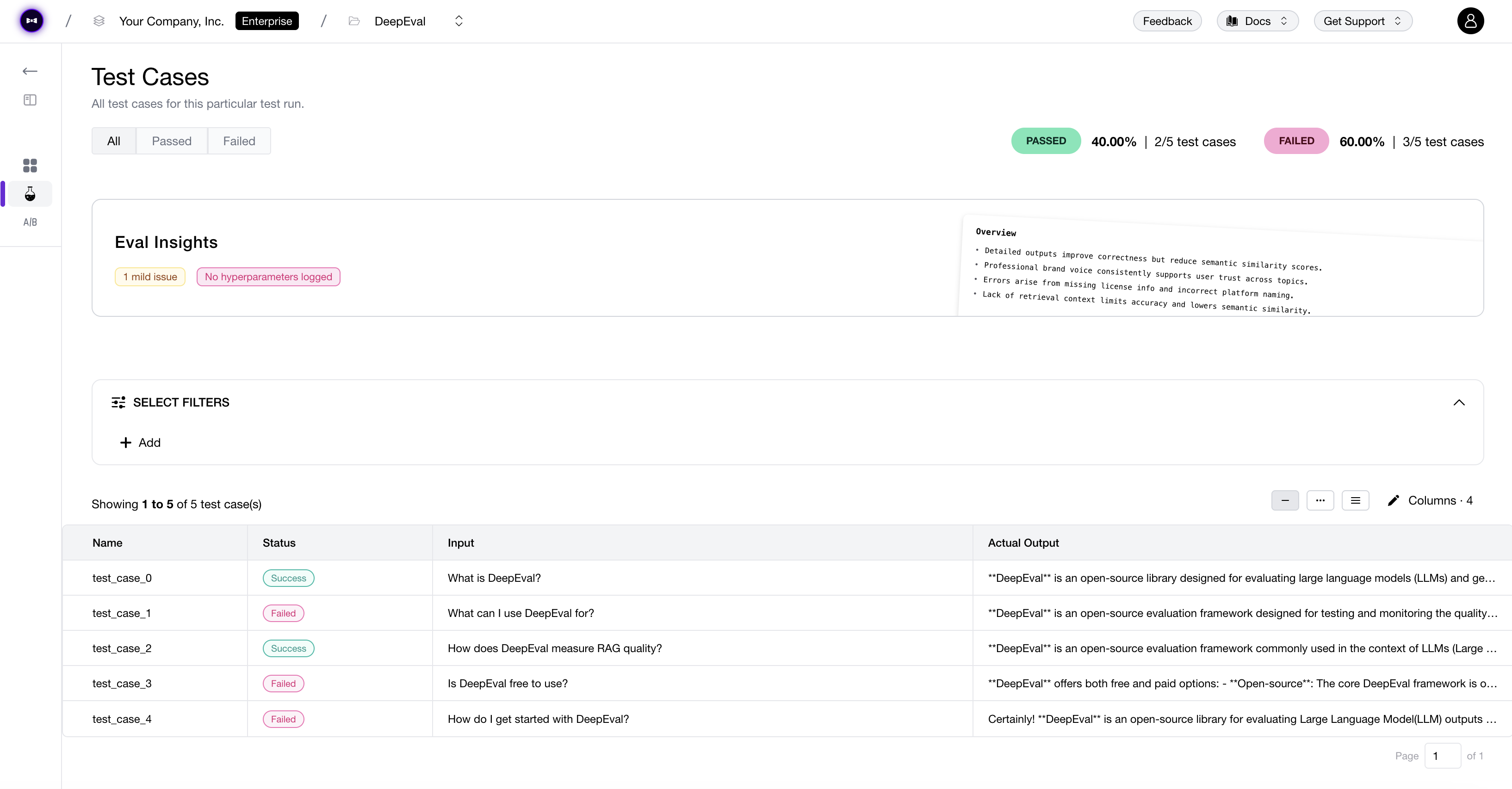

Built by the authors of DeepEval, Confident AI is a cloud LLM evaluation platform that lets you use DeepEval for team-wide, collaborative AI testing.